Introduction

Picture this: you've built a masterpiece of code, a digital marvel that works flawlessly on your machine. But the moment it ventures out into the wild world of production servers or your colleague's computer, it stumbles and falters like a sleepy marathon runner. Sound familiar? Ah, the classic developer's woe: "It works on my machine!" Fear not, Docker is here to save the day!

Imagine a magical box, a container that wraps up your code along with its dependencies, its configuration, and even a sprinkle of networking magic. Docker turns this fantasy into reality, making your creations portable, consistent, and ready to dazzle on any stage, from your laptop to the grandest server.

No more wrestling with installation headaches – Docker even makes sharing your "containerized" creations as easy as posting a status update. In a nutshell, Docker is your code's best travel companion, ensuring that "It works on my machine" transforms into "It works, everywhere!".

🤔 So what is Docker again?

Docker is a containerization platform that lets you create, deploy, and manage lightweight, isolated software environments known as containers. Don't worry, we'll look at containers in more detail shortly.

Docker Fundamentals

I hope you've gained a basic understanding of Docker by now. It's time to delve into the core concepts surrounding Docker and the world of containerization. In the upcoming sections, we'll explore these fundamental concepts in greater detail.

📦 Containers

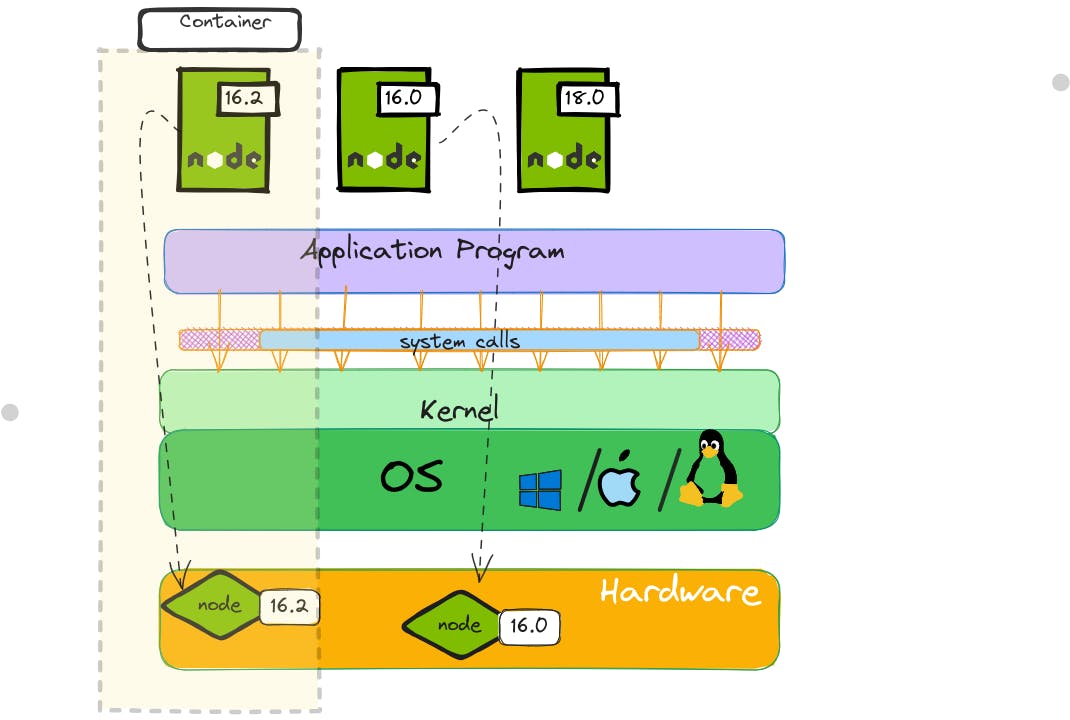

To make the idea concrete, let's refer to the diagram above rather than relying on imagination alone.

Let's consider the example of Node.js, the popular JavaScript runtime. Picture your hard disk as a canvas where different versions of Node are painted. You might have Node 16.0, 16.2, and 18.0 all coexisting. These versions represent various tools you can use to build applications. Now, suppose your existing application is tailored to run on Node 16.0. Everything works flawlessly. However, to test a newer version, say Node 16.2, you'd ideally like a separate environment that won't interfere with your existing setup.

This is where namespacing comes in. Think of it as a way to create virtual compartments on the same machine, each with its own view of the filesystem and other system resources, so that each can hold a distinct version of Node. Each compartment, or namespace, stays isolated from the others, allowing you to install different Node versions without worrying about conflicts.

See the yellow shaded area in the diagram above.

Now, let's bridge the gap between namespacing and containers. In the world of containers, each version of Node lives in its own mini-universe. It's not just the Node binary; it's an encapsulated environment that includes the necessary libraries, dependencies, and even the user-space parts of an operating system, while its system calls are still handled by the host's kernel. Imagine this environment as a box, self-sufficient and sealed off from the outside world. This box is a container.

In the diagram, you can see Node versions such as 16.2 and 16.0 neatly separated, each in its own container, and we can run many such containers side by side. Each container holds everything needed to run a Node application. If you upgrade your application to Node 16.2, you can confidently run it in its designated container, and it won't be affected by external changes because it's enclosed in its own isolated space. This isolation means that even if different projects need different Node versions, they won't interfere with each other.
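To make the "box" idea a little more concrete, here is a toy Python model; it is an analogy, not how Docker is actually implemented. Each hypothetical `ToyContainer` keeps its own private table of installed packages, while every container shares a single `HostKernel` object, mirroring how real containers share the host's kernel. All class names and the kernel release string are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class HostKernel:
    """Toy stand-in for the single kernel that all containers share."""
    release: str = "hypothetical-kernel"

    def syscall(self, name: str) -> str:
        # Every container's system calls end up on this one shared kernel.
        return f"{name} handled by {self.release}"


@dataclass
class ToyContainer:
    """A sealed box: private packages, borrowed kernel."""
    name: str
    kernel: HostKernel
    packages: dict = field(default_factory=dict)  # package -> version

    def install(self, package: str, version: str) -> None:
        # Installs land only in this container's own package table.
        self.packages[package] = version

    def run(self, package: str) -> str:
        if package not in self.packages:
            raise FileNotFoundError(f"{package} is not installed in {self.name}")
        version = self.packages[package]
        return f"{self.name}: {package} {version} ({self.kernel.syscall('execve')})"


if __name__ == "__main__":
    kernel = HostKernel()
    legacy = ToyContainer("legacy-app", kernel)
    trial = ToyContainer("upgrade-trial", kernel)
    legacy.install("node", "16.0")
    trial.install("node", "16.2")
    print(legacy.run("node"))  # legacy-app: node 16.0 (execve handled by hypothetical-kernel)
    print(trial.run("node"))   # upgrade-trial: node 16.2 (execve handled by hypothetical-kernel)
```

Upgrading Node in `upgrade-trial` never touches `legacy-app`, yet both still talk to the same kernel object.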

So far, we've seen that containers are wizards of isolation, creating safe spaces for applications to play in and making sure they behave the same no matter where they're put. This streamlines testing, launching, and scaling apps, banishing the "It works on my machine" headache. But wait, there's more! Our journey isn't over.

Next stop: understanding how containers differ from virtual machines and why these modern containers, especially with Docker, are superstars in the tech world.

💻 Virtual Machines

Now that we understand containers, let's look at virtual machines.

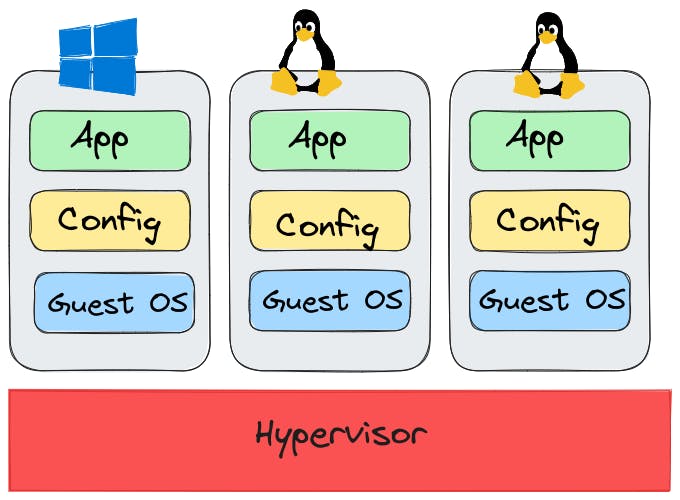

The diagram above depicts a virtual machine setup on a production server. At its core is a hypervisor, a layer of software that creates and runs virtual machines, acting as a mediator between the physical hardware and the virtual machines. The hypervisor enables the creation of multiple isolated environments, each represented by a box. Each box contains three main components: the application, its configuration, and a guest operating system.

Now, let's look at virtual machines more closely. As the name suggests, each box is effectively an independent computer within the server, running its own full-fledged operating system, including all the necessary software, drivers, and settings. The application and its configuration live inside this virtual environment. However, this approach has some inherent drawbacks: virtual machines are heavy on resources. Since each virtual machine needs a complete operating system of its own, it can strain the server's memory, processing power, and storage, leading to inefficiencies and limits when scaling or optimizing resource use.

In contrast to namespace containers, which share the host's operating system kernel to create separate environments, virtual machines offer strong isolation because each one has a complete system of its own. Each virtual machine runs an independent instance of an operating system, which provides strong security boundaries and minimizes interference between applications.

Despite these drawbacks, virtual machines offer some distinct advantages:

Strong Isolation: Virtual machines excel in offering robust isolation, making them well-suited for scenarios where security and separation between applications are paramount.

Operating System Diversity: Virtual machines can run various operating systems concurrently. This capability proves valuable for accommodating legacy software and creating specialized environments as needed.

Independence: Each virtual machine operates as an autonomous entity with its complete system setup. This autonomy provides flexibility for software installations and configurations, enabling customization to suit specific application requirements.

🐳 Docker Container

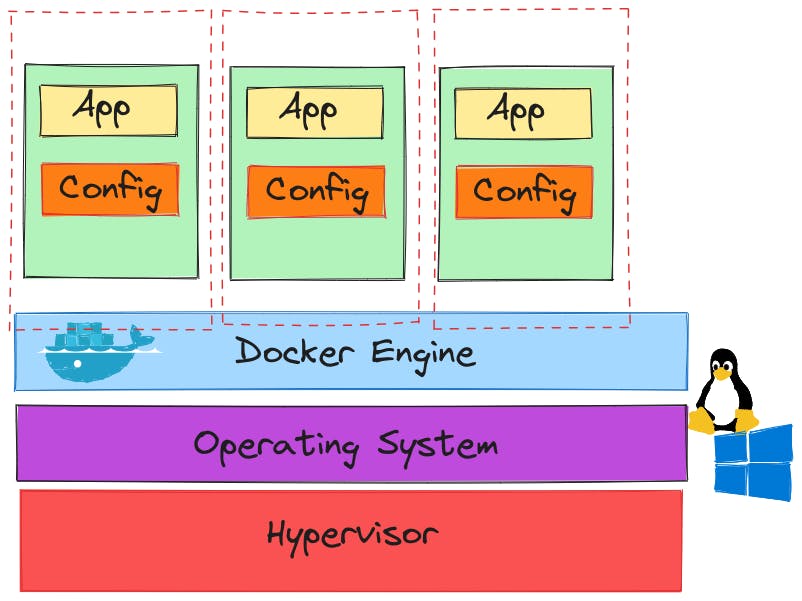

As technology evolved, the concept of containers gained prominence, introducing a more lightweight alternative to virtual machines. Unlike virtual machines, which require a full operating system, Docker containers leverage the host system's kernel while isolating the application's runtime environment. This drastically reduces resource overhead and accelerates deployment times. In the following section, we'll explore Docker containers in detail and examine how they address some of the limitations posed by traditional virtual machines and the namespace containers.
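The overhead argument boils down to simple arithmetic. The sketch below assumes that each VM carries a full guest OS next to its application, while containers reuse the host kernel and pay only a one-time engine cost; the host OS is present in both setups, so it is left out. The sizes in the example are arbitrary placeholder units, not measurements, and the function names are our own.

```python
def vm_footprint(n: int, guest_os: float, app: float) -> float:
    """Total cost of n VMs: each one carries its own guest OS plus the app."""
    return n * (guest_os + app)


def container_footprint(n: int, app: float, engine: float = 0.0) -> float:
    """Total cost of n containers: they share the host kernel, and the
    container engine is paid for once rather than per instance."""
    return engine + n * app


if __name__ == "__main__":
    # Placeholder units only: 3 instances, a 1000-unit guest OS,
    # a 100-unit app (including its libraries), a 50-unit engine.
    print(vm_footprint(3, guest_os=1000, app=100))     # 3300
    print(container_footprint(3, app=100, engine=50))  # 350
```

Whatever numbers you plug in, the guest OS term is multiplied by the number of VMs, while the containers only repeat the application itself.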

As the diagram above shows, Docker adds a new layer above the operating system: the Docker Engine. This addition marks a transformative leap in how we manage and deploy applications. The Docker Engine handles application containerization, a method for packaging applications together with their dependencies, with remarkable efficiency. Rather than running full-fledged virtual machines, it offers a modern solution that combines the benefits of lightweight namespace containerization with those of traditional virtualization.

But we've already seen namespace containers, so why do we need Docker?

While we've explored the concept of namespace containers, let's delve deeper into the world of Linux namespaces and another essential component, Cgroups. These building blocks laid the foundation for containerization, but challenges emerged that Docker would eventually resolve.

Namespaces first appeared in the Linux kernel in 2002, when the mount namespace arrived in Linux 2.4.19. More namespace types followed over the next decade, and by Linux 3.8 (2013) there were six:

User Namespaces: Isolate user and group IDs, providing user identity separation within a namespace.

PID: Create independent process ID spaces, allowing processes to have unique IDs within each namespace.

Network Namespaces: Segment network resources, including interfaces and routing tables, for isolated network communication.

Mount Namespaces: Isolate filesystem mounts, enabling different views of the filesystem hierarchy within separate namespaces.

UTS Namespaces (UNIX Time-Sharing System): Isolate the system's hostname and domain name, providing separate identification values within namespaces.

IPC (Inter-Process Communication): Provide isolated inter-process communication mechanisms, like message queues and semaphores, for processes within a namespace.

Later, a seventh namespace joined them: the cgroup namespace (Linux 4.6, 2016), which isolates a process's view of its Cgroups. Cgroups, short for Control Groups, are a separate Linux kernel feature (merged in Linux 2.6.24) for fine-grained resource management and allocation for processes and groups of processes. Cgroups let administrators impose limits on and prioritize resources such as CPU, memory, I/O, and network bandwidth among different processes or groups of processes running on a system. In essence, Cgroups control how resources are distributed across processes, preventing one process or group of processes from monopolizing system resources and causing performance degradation or instability.
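Here is a toy Python model of that idea, using a memory limit as the example. It is only an illustration under our own assumptions: the hypothetical `ToyCgroup` class simply refuses a charge that would push the whole group past its limit, whereas the real kernel (for example through the cgroup v2 `memory.max` file, which Docker's `--memory` flag controls) first tries to reclaim memory and then invokes the OOM killer inside that cgroup. The point it shows is that the limit applies per group, so one runaway group hits its own ceiling instead of consuming everything.

```python
class ToyCgroup:
    """Toy model of a cgroup with a memory limit (the kernel does this for real)."""

    def __init__(self, name: str, memory_max: int) -> None:
        self.name = name
        self.memory_max = memory_max  # limit for the whole group, in bytes
        self.usage = {}               # pid -> bytes charged to that process

    def add_process(self, pid: int) -> None:
        self.usage.setdefault(pid, 0)

    def total(self) -> int:
        return sum(self.usage.values())

    def charge(self, pid: int, nbytes: int) -> bool:
        """Charge nbytes to pid, refusing if the group would exceed its limit."""
        if pid not in self.usage:
            raise KeyError(f"pid {pid} is not in cgroup {self.name}")
        if self.total() + nbytes > self.memory_max:
            # A real kernel would try to reclaim memory, then OOM-kill in this group.
            return False
        self.usage[pid] += nbytes
        return True


if __name__ == "__main__":
    greedy = ToyCgroup("greedy", memory_max=100)
    calm = ToyCgroup("calm", memory_max=50)
    greedy.add_process(1)
    calm.add_process(2)
    print(greedy.charge(1, 100))  # True: exactly at the limit
    print(greedy.charge(1, 1))    # False: the greedy group hits its own ceiling
    print(calm.charge(2, 50))     # True: the other group is unaffected
```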

Before Docker became popular, using namespaces and Cgroups for containers was like trying to coordinate a musical performance without a conductor. While these technologies had the potential to isolate and manage resources, making them work together was complicated and needed a lot of hands-on work.

This is where Docker shines. It brings namespaces and Cgroups together within a unified engine, offering the best of both worlds. For each container, Docker sets up the namespaces that keep it isolated and configures the Cgroups that keep its resource demands within bounds, so you no longer have to wire these pieces together by hand. That makes containerization accessible and efficient.

In short, Docker takes these complex parts (namespaces that keep things separate and Cgroups that control resources) and fits them together smoothly. Like a skilled conductor leading a group of musicians, Docker makes these pieces play in harmony, which is what makes containers work so well. With Docker, your applications get their own isolated space, while the tricky parts of making that happen are handled behind the scenes.

Furthermore, the Docker Engine streamlines creating, sharing, and managing these containers. It relies on a powerful concept called "images" (we'll cover them in detail later): an image is a read-only template, built from a set of instructions, that packages everything needed to start a container. This makes applications easy to share and helps them behave consistently across different environments.
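As a small preview, here is a toy model of one idea behind images: an image is a stack of read-only layers, and each container started from it gets its own thin writable layer on top. Python's `collections.ChainMap` happens to behave this way (lookups search its maps in order, and writes go only to the first map), so it makes a handy stand-in. The layer contents and the `start_container` helper are hypothetical; on Linux, Docker's default overlay2 storage driver applies a similar idea at the filesystem level.

```python
from collections import ChainMap

# Hypothetical image layers, listed from the base layer up.
BASE = {"/bin/sh": "shell", "/etc/os-release": "tiny-linux"}
RUNTIME = {"/usr/local/bin/node": "node 16.2"}
APP = {"/app/server.js": "console.log('v1')"}


def start_container(*image_layers: dict) -> ChainMap:
    """Put a fresh writable layer on top of the image's read-only layers.

    ChainMap looks keys up from left to right, so the writable layer and
    then the top-most image layer win; assignments only touch maps[0].
    """
    writable_layer = {}
    return ChainMap(writable_layer, *reversed(image_layers))


if __name__ == "__main__":
    first = start_container(BASE, RUNTIME, APP)
    second = start_container(BASE, RUNTIME, APP)
    first["/app/server.js"] = "console.log('patched')"
    print(first["/app/server.js"])        # console.log('patched')
    print(second["/app/server.js"])       # still console.log('v1'); the shared layer is untouched
    print(second["/usr/local/bin/node"])  # node 16.2, found in a lower layer
```

Both containers reuse the same image layers, and a change made inside one container stays in that container's own writable layer.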

Conclusion

Concluding our dive into containers and their transformative role in modern software deployment, we've discovered how they reshape application handling, ensuring efficiency and consistency. With Docker as our guiding light, development and deployment are now more potent and streamlined. As we set our sights on upcoming topics, our next blog will delve into the intricacies of Docker Engine's inner workings. We'll navigate the world of working with containers, uncovering the heart of this transformational technology. Brace yourself for the upcoming chapter, as we venture even further into the realm of Docker.